Vertex AI Infrastructure for MLOps on Google Cloud Platform

Google Cloud Platform (GCP) offers a suite of powerful tools and services for building, training, and deploying machine learning models. One of these tools is Vertex AI, a fully managed platform for building and deploying machine learning models at scale.

Vertex AI simplifies training, evaluating, and deploying machine learning models through a single interface. The platform also offers a range of pre-built models and algorithms, as well as tools and services for data preprocessing, feature engineering, model evaluation, and more.

One of the key advantages of Vertex AI is its ability to scale. The platform is designed to handle large amounts of data and complex machine learning workloads, making it ideal for enterprise-level machine learning applications. Vertex AI also offers automatic scaling and optimized performance, so users can focus on building and deploying their models without worrying about the underlying infrastructure.

Another benefit of Vertex AI is its integration with other GCP services, such as BigQuery for data storage and analysis and Cloud Storage for data storage and retrieval. It also supports open-source frameworks such as TensorFlow for model training and deployment. This allows users to build end-to-end machine learning pipelines, from data preparation and model training to deployment and monitoring.

Previously, users who wanted to run Kubeflow Pipelines on GCP had to create a GKE cluster and manage it themselves. This required a certain level of technical expertise and could be time-consuming and complex. With Vertex AI, however, users can simply write their Pipelines using the Kubeflow Pipelines Domain-Specific Language (DSL) and let Vertex AI handle the underlying infrastructure.

Vertex AI employs a serverless approach to running Pipelines, which means that the Kubernetes clusters and the pods running on them are managed automatically behind the scenes. This allows users to focus on building and deploying their machine learning models, without worrying about the complexity of the underlying infrastructure.

The serverless approach also offers a number of benefits. First, it allows for greater scalability and flexibility, as Vertex AI can automatically spin up and down Kubernetes clusters and pods as needed. Second, it offers improved performance and reliability, as Vertex AI automatically optimizes the underlying infrastructure for maximum efficiency and uptime. Finally, it simplifies the overall process of building and deploying machine learning models, making it easier for users to focus on the tasks that matter most.

Vertex AI Components

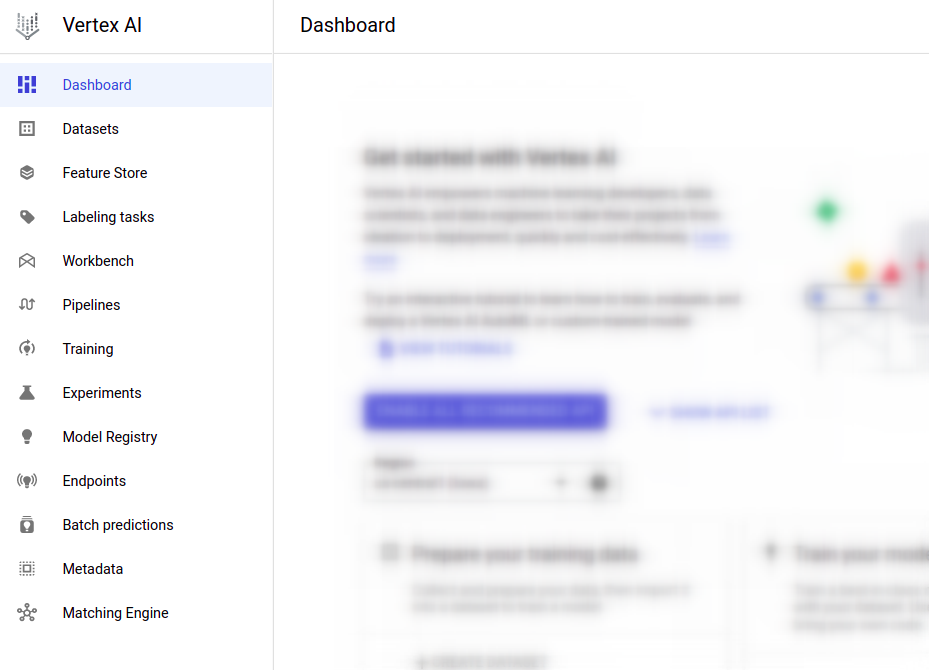

Dashboard

The Vertex AI Dashboard is a web-based interface that allows users to manage and monitor their machine learning projects on Vertex AI. The Dashboard provides an overview of all of the user's projects, along with details such as the project's status, the number of models and datasets, and the amount of data processed.

The Dashboard also provides access to tools and services for building, training, and deploying machine learning models. These include tools for data preparation, such as data labeling and preprocessing, as well as tools for model training and evaluation.

In addition to these tools, the Dashboard links to other GCP services that are integrated with Vertex AI, such as BigQuery for data storage and analysis and Cloud Storage for data storage and retrieval, so each stage of a machine learning workflow can be reached from one place.

Datasets

Vertex AI Datasets is a service on Vertex AI that allows users to store and manage their datasets for use in machine learning projects. Vertex AI Datasets offers a range of features and capabilities, including support for various data formats and sources, automatic data labeling and preprocessing, and integration with other GCP services such as BigQuery and Cloud Storage.

With Vertex AI Datasets, users can import their datasets into the platform and manage them in a central location. Data is typically imported from Cloud Storage, for example as CSV or JSON Lines files, or from BigQuery for tabular data. This makes the same dataset available across a user's machine learning projects.

Vertex AI Datasets also offers automatic data labeling and preprocessing. The service can automatically label data using a range of techniques, such as object detection and natural language processing, and can also automatically preprocess data to extract relevant features and labels. This simplifies the process of preparing data for machine learning model training and allows users to focus on building and deploying their models.

Because Vertex AI Datasets works directly with BigQuery and Cloud Storage, the same data can feed every later stage of the workflow, from model training to deployment and monitoring.

Feature Store

Vertex AI Feature Store provides a centralized repository for organizing, storing, and serving machine learning features. Features are pieces of information extracted from raw data that are used as input for training machine learning models. By using a central feature store, organizations can efficiently share, discover, and re-use machine learning features at scale, which can increase the speed at which new machine learning applications are developed and deployed.

Vertex AI Feature Store is a fully managed solution, which takes care of managing and scaling the underlying infrastructure such as storage and compute resources. This means that data scientists can focus on the feature computation logic without worrying about the challenges of deploying features into production.

Before using Vertex AI Feature Store, you may have computed feature values and saved them in different locations such as tables in BigQuery and files in Cloud Storage. You may also have built and managed separate solutions for storing and consuming feature values. In contrast, Vertex AI Feature Store provides a unified solution for batch and online storage as well as serving of machine learning features.

If you produce features in a feature store, you can quickly share them with others for training or serving tasks. Teams don't need to re-engineer features for different projects or use cases. Also, because you can manage and serve features from a central repository, you can maintain consistency across your organization and reduce duplicate efforts, particularly for high-value features.

Vertex AI Feature Store provides search and filter capabilities so that others can easily discover and reuse existing features. For each feature, you can view relevant metadata to determine the quality and usage patterns of the feature. For example, you can view the fraction of entities that have a valid value for a feature.
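The coverage figure mentioned above, the fraction of entities that have a valid value for a feature, can be illustrated with a few lines of plain Python. This is only a sketch of the idea and does not call the Feature Store API; the entity IDs, feature names, and the `feature_coverage` helper are hypothetical.

```python
from typing import Mapping, Optional


def feature_coverage(
    entities: Mapping[str, Mapping[str, Optional[float]]],
    feature: str,
) -> float:
    """Return the fraction of entities with a non-missing value for `feature`."""
    if not entities:
        return 0.0
    valid = sum(
        1 for values in entities.values() if values.get(feature) is not None
    )
    return valid / len(entities)


# Hypothetical entities and feature values, for illustration only.
users = {
    "user_1": {"avg_basket_value": 42.5, "days_since_last_order": 3},
    "user_2": {"avg_basket_value": None, "days_since_last_order": 10},
    "user_3": {"days_since_last_order": 1},
    "user_4": {"avg_basket_value": 17.0, "days_since_last_order": None},
}

basket_coverage = feature_coverage(users, "avg_basket_value")        # 2 of 4 entities
recency_coverage = feature_coverage(users, "days_since_last_order")  # 3 of 4 entities
print(basket_coverage, recency_coverage)
```

A low coverage value is a hint that a feature may be too sparse to rely on for training or serving.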

Labeling Tasks

Vertex AI Labeling Task lets users label data for use in machine learning projects. It provides a simple interface for labeling and can be used with a range of data types, including images, text, and audio.

To use Vertex AI Labeling Task, users first need to create a labeling task and specify the data to be labeled. The Labeling Task feature supports a variety of data sources, including Cloud Storage, BigQuery, and Cloud SQL, and allows users to label data in various formats, such as CSV, JSON, and TFRecord.

Once the labeling task is created, users can invite other users to label the data. Vertex AI Labeling Task provides a simple, user-friendly interface for labeling data, and allows users to label data using a variety of techniques, such as object detection, text classification, and speech transcription. Users can also add comments and notes to the labeled data, and can review and approve labels submitted by other users.

Vertex AI Labeling Task also offers a range of tools and services for managing and monitoring labeling tasks, including the ability to view the progress and status of labeling tasks, as well as tools for analyzing and visualizing the labeled data. This allows users to easily track the progress of their labeling tasks and ensure that the labeled data is of high quality.

Workbench

Vertex AI Workbench is a platform that provides a Jupyter notebook-based environment for data science development. It allows users to interact with Vertex AI and other Google Cloud services within the notebook, and offers a range of integrations and features to help with tasks such as accessing data, speeding up data processing, and scheduling notebook runs. Vertex AI Workbench also offers two options for notebooks: a managed option with built-in integrations, and a user-managed option for users who need more control over their environment.

Pipelines

Vertex AI Pipelines is a tool that helps users automate, monitor, and govern their machine learning (ML) systems. It allows users to orchestrate their ML workflow in a serverless manner, and to store the artifacts of their workflow using Vertex ML Metadata.

Storing the artifacts of an ML workflow in Vertex ML Metadata can be useful for analyzing the lineage of those artifacts. For example, the lineage of an ML model might include the training data, hyperparameters, and code that were used to create the model.

To use Vertex AI Pipelines, users must first describe their workflow as a pipeline. ML pipelines are portable and scalable ML workflows that are based on containers. They are composed of a set of input parameters and a list of steps, each of which is an instance of a pipeline component.
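The structure described above, a set of input parameters plus an ordered list of steps, can be sketched in plain Python. This is a toy model of the idea only: real Vertex AI pipelines are defined with the Kubeflow Pipelines SDK or TensorFlow Extended and run each step in a container. The `Step` class, `run_pipeline` function, and the two example components are hypothetical names used for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class Step:
    """One pipeline step: a named instance of a component (here, a function)."""
    name: str
    component: Callable[[Dict[str, Any]], Dict[str, Any]]


def run_pipeline(parameters: Dict[str, Any], steps: List[Step]) -> Dict[str, Any]:
    """Run steps in order; each step reads the shared context and adds its outputs."""
    context = dict(parameters)
    for step in steps:
        outputs = step.component(context)
        context.update(outputs)
    return context


def prepare_data(ctx: Dict[str, Any]) -> Dict[str, Any]:
    # Keep only the first `num_rows` values of the raw data.
    return {"rows": ctx["raw"][: ctx["num_rows"]]}


def train(ctx: Dict[str, Any]) -> Dict[str, Any]:
    # Stand-in "model": the mean of the rows, scaled by a hyperparameter.
    mean = sum(ctx["rows"]) / len(ctx["rows"])
    return {"model": mean * ctx["scale"]}


result = run_pipeline(
    {"raw": [1.0, 2.0, 3.0, 4.0], "num_rows": 3, "scale": 2.0},
    [Step("prepare_data", prepare_data), Step("train", train)],
)
print(result["model"])
```

Rerunning `run_pipeline` with a different `scale` or `num_rows` reuses the same steps, which is the property that makes pipelines convenient for repeated experiments.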

ML pipelines can be used for a variety of purposes, including:

- Applying MLOps strategies to automate and monitor repeatable processes.

- Experimenting by running an ML workflow with different sets of hyperparameters, number of training steps or iterations, etc.

- Reusing a pipeline's workflow to train a new model.

Vertex AI Pipelines is compatible with pipelines that were built using the Kubeflow Pipelines SDK or TensorFlow Extended, so workflows written with either can run on it without the user managing a cluster.

Experiments

In Vertex AI, an experiment records a specific instance of training a machine learning model with a particular set of data and settings. Each record captures the data used for training, the machine learning algorithm and framework, and any hyperparameters or other settings, together with the resulting performance metrics, so that you can evaluate the model's accuracy and performance.

By creating multiple experiments with different data sets, algorithms, and settings, you can compare and contrast the results to determine which combination yields the best results for your specific use case. This allows you to fine-tune your machine learning model and improve its performance over time. Additionally, Vertex AI provides tools for tracking and organizing your experiments, so you can keep track of your progress and easily reproduce your results in the future.
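The comparison step can be sketched as follows. This is plain Python rather than the Vertex AI SDK, and the `Run` class, `best_run` helper, parameter names, and metric values are all made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Run:
    """One training run: its settings and the metrics it produced."""
    name: str
    params: Dict[str, float]
    metrics: Dict[str, float] = field(default_factory=dict)


def best_run(runs: List[Run], metric: str, higher_is_better: bool = True) -> Run:
    """Return the run with the best value for `metric`, ignoring runs without it."""
    scored = [r for r in runs if metric in r.metrics]
    if not scored:
        raise ValueError(f"no run logged the metric {metric!r}")
    pick = max if higher_is_better else min
    return pick(scored, key=lambda r: r.metrics[metric])


# Hypothetical runs and metric values.
runs = [
    Run("run-1", {"learning_rate": 0.1, "epochs": 5}, {"accuracy": 0.81, "loss": 0.52}),
    Run("run-2", {"learning_rate": 0.01, "epochs": 10}, {"accuracy": 0.88, "loss": 0.35}),
    Run("run-3", {"learning_rate": 0.001, "epochs": 10}, {"accuracy": 0.84, "loss": 0.41}),
]

most_accurate = best_run(runs, "accuracy")
lowest_loss = best_run(runs, "loss", higher_is_better=False)
print(most_accurate.name, most_accurate.params)
```

Keeping the parameters next to the metrics is what makes a result reproducible: the winning run carries the settings needed to train it again.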

Model Registry

The Vertex AI Model Registry is a feature that allows you to store and manage your trained machine learning models in a central location. This can be useful for keeping track of your models and their performance over time, as well as for sharing models with other users or deploying them to production environments.

When you train a model using Vertex AI, you have the option to save it to the Model Registry. This adds the model to your registry, where you can view its details, such as its performance metrics and the data and settings used to train it. You can also tag and organize your models, making it easy to find and use the models that are most relevant to your current project.

In addition to storing and organizing your models, the Vertex AI Model Registry also provides tools for versioning your models. This allows you to keep track of different versions of the same model, so you can easily compare their performance and choose the best one for your needs. You can also use the Model Registry to collaborate with other users, sharing models and providing feedback on their performance. Overall, the Model Registry is a useful tool for managing and deploying your trained machine learning models.

Endpoints

Endpoints let users deploy trained models behind a REST API, making it easy for other applications to request and use the model's predictions.

There are many potential use cases for Endpoints. For example, a company might use a machine learning model trained on customer data to predict which products a customer is likely to purchase, and then use Endpoints to deploy that model as a REST API that its e-commerce platform can call. This would allow the platform to make personalized product recommendations to customers in real time.
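As a rough sketch of what a call to a deployed model looks like, the snippet below builds the URL and JSON body of a prediction request without sending it. The project ID, region, endpoint ID, and instance fields are placeholders, and the exact instance schema depends on the deployed model. A real request would also need an OAuth 2.0 access token in the `Authorization` header.

```python
import json
from typing import Any, Dict, List, Tuple


def build_predict_request(
    project: str, region: str, endpoint_id: str, instances: List[Dict[str, Any]]
) -> Tuple[str, str]:
    """Return the (url, json_body) pair for an online prediction request."""
    url = (
        f"https://{region}-aiplatform.googleapis.com/v1/"
        f"projects/{project}/locations/{region}/endpoints/{endpoint_id}:predict"
    )
    # The request body wraps the inputs in an "instances" list.
    body = json.dumps({"instances": instances})
    return url, body


# Placeholder identifiers and a hypothetical instance schema.
url, body = build_predict_request(
    project="my-project",
    region="us-central1",
    endpoint_id="1234567890",
    instances=[{"customer_id": "c-42", "recent_categories": ["shoes", "outdoor"]}],
)
print(url)
print(body)
```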

Batch Predictions

Batch Predictions allow users to use trained models to make predictions on large datasets in batch mode.

Batch Predictions can be useful in many different scenarios. For example, a company might have a large dataset of customer data that they want to use to generate personalized product recommendations. In this case, they could train a machine learning model to predict which products a customer is likely to purchase, and then use Batch Predictions to run the model on the entire dataset and generate recommendations for all of the customers in the dataset. This could be done quickly and efficiently, allowing the company to make personalized recommendations to a large number of customers in a short amount of time.

Another potential use case for Batch Predictions is in the healthcare industry. A hospital might have a large dataset of patient records and want to use machine learning to identify patients who are at risk for a particular disease. In this case, they could train a model to predict which patients are at risk, and then use Batch Predictions to run the model on the entire dataset and identify all of the high-risk patients. This would allow the hospital to quickly identify patients who need additional care and preventative measures.
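The difference from real-time serving is that the whole dataset is scored offline, a chunk at a time. The sketch below shows that pattern in plain Python. It is not the Vertex AI batch prediction API, and `risk_model` is a hypothetical rule standing in for a trained model.

```python
from typing import Any, Callable, Dict, Iterable, Iterator, List

Record = Dict[str, Any]


def batch_predict(
    records: Iterable[Record],
    predict_fn: Callable[[List[Record]], List[str]],
    batch_size: int = 2,
) -> Iterator[str]:
    """Score records in fixed-size chunks and yield predictions in input order."""
    batch: List[Record] = []
    for record in records:
        batch.append(record)
        if len(batch) == batch_size:
            yield from predict_fn(batch)
            batch = []
    if batch:
        # Score the final, possibly smaller, chunk.
        yield from predict_fn(batch)


def risk_model(batch: List[Record]) -> List[str]:
    # Hypothetical rule standing in for a trained model.
    return ["high" if r["age"] >= 65 else "low" for r in batch]


patients = [{"age": 70}, {"age": 30}, {"age": 65}, {"age": 50}, {"age": 81}]
predictions = list(batch_predict(patients, risk_model, batch_size=2))
print(predictions)
```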

Metadata

Vertex ML Metadata allows users to store and manage metadata associated with their machine learning models and datasets.

Metadata is important in the context of machine learning because it provides information about the data and models that are being used. This information can be used to understand how the data was collected, how the models were trained, and what the models are capable of.

In Vertex AI, stored metadata includes information such as the type of data that was used to train the model, the algorithms and hyperparameters that were used, and the performance of the model on various tasks.

Metadata can be useful in many different ways. For example, it can help users understand the limitations and capabilities of their machine learning models, which can be useful for debugging and improving performance. It can also be used to keep track of changes to models and data over time, which can be helpful for maintaining the integrity and reliability of machine learning systems.
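One way to picture how stored metadata supports this kind of tracking is as a graph that links each artifact to the inputs it was produced from. The sketch below walks such a graph to find everything a model depends on. It is plain Python with made-up artifact names, not the Vertex ML Metadata API.

```python
from typing import Dict, List, Set


def upstream(artifact: str, inputs: Dict[str, List[str]]) -> Set[str]:
    """Return every artifact that `artifact` depends on, directly or indirectly."""
    seen: Set[str] = set()
    stack = list(inputs.get(artifact, []))
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(inputs.get(node, []))
    return seen


# Hypothetical lineage: each key maps to the artifacts used to produce it.
lineage = {
    "model_v1": ["training_set", "hyperparameters", "training_code"],
    "training_set": ["raw_data"],
}

model_lineage = upstream("model_v1", lineage)
print(sorted(model_lineage))
```

With this information recorded, a question such as "which raw data does this model ultimately depend on?" becomes a simple graph lookup.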

Matching Engine

The Matching Engine is a Vertex AI component for matching items in large datasets based on their similarity.

The Matching Engine compares items that are represented as numeric vectors (embeddings) and determines how similar they are to each other, typically by finding the nearest neighbors of a query vector. This can be useful in many different scenarios, such as matching customers with similar interests or preferences, or matching products that are similar to each other in terms of their features and characteristics.
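The idea of matching by similarity can be sketched with an exact, brute-force nearest-neighbor search over small vectors. This is an illustration only: a managed service at scale would rely on approximate search rather than comparing a query with every item, and the product names and vectors here are invented.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(
    query: Sequence[float], items: Dict[str, Sequence[float]], k: int = 2
) -> List[Tuple[str, float]]:
    """Return the k items most similar to `query`, most similar first."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in items.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical product embeddings.
products = {
    "running_shoes": [0.9, 0.1, 0.0],
    "trail_shoes": [0.8, 0.2, 0.1],
    "coffee_maker": [0.0, 0.1, 0.9],
}

matches = top_k([1.0, 0.0, 0.0], products, k=2)
print([name for name, _ in matches])
```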

One potential use case for the Matching Engine is in the e-commerce industry. A company could use the Matching Engine to match customers with similar interests and preferences, and then use this information to make personalized product recommendations. For example, the Matching Engine could be used to identify customers who have similar purchase histories or browsing habits, and then recommend products to those customers that are similar to the ones they have shown an interest in before.

Another potential use case for the Matching Engine is in the healthcare industry. A hospital could use the Matching Engine to match patients with similar medical conditions, and then use this information to provide more personalized care. For example, the Matching Engine could be used to identify patients who have similar symptoms or risk factors, and then recommend treatments or interventions that have been successful for other patients with similar profiles.

Author: Sadman Kabir Soumik